Albert Einstein once said: "If we knew what we were doing, it wouldn't be called 'research'!"

In what follows I'm simply showing what I've done, although I'm not showing everything I've done. I've fumbled around a lot trying to find sensible ways to extract meaning from morass. There have been some glimmers, but the problem is that the true meaning is not in the symbols themselves. I hope that comment makes sense either now, or in a while.

If you haven't yet, read these:

So here I am with over 100 routines that people have sent me, and I'm wondering what to do with them. I start to play a bit, compare directly with the ones I wrote, notice that several of the ones sent to me are quite similar to the ones I've written, but also notice that lots are different.

Very different.

So here are some questions:

... and so on. There are lots of questions, and I'll going to get into some of them later. For now, I'm going to have a look at some of the routines in more detail, and describe the techniques I've used to reduce my workload, and try to find out interesting things.

Well, I call it normalisation, but in reality I take a fingerprint of each routine, throwing away the irrelevant detail, and keeping (most of) the relevant form and structure. In particular:

For example, my compact version of the code:

void condense_by_removing(

char *z_terminated,

char char_to_remove

) {

char *p_read;

for (p_read = z_terminated;*p_read;p_read++)

if (*p_read != char_to_remove)

*z_terminated++ = *p_read;

*z_terminated = '\0';

}

... becomes ...

vxc*x,cx)c*x;fx=x;*x;xI)i*xNx)*xI=*x;*x=Z;}

| (Added in proof: I forgot to remove opening square brackets - that will change the analysis in later updates, but it doesn't make too much difference here.) |

So having fingerprinted all the routines, what now? The obvious thing is to find all pairwise distances, and who am I to avoid the obvious ...

Routine Length LD Ratio

1 2 1 2

g001.d g002.d 43 107 73 0.20930233

g001.d g003.d 43 53 23 0.30232558

g001.d g004.d 43 46 18 0.34883721

g001.d g005.d 43 45 20 0.41860465

g001.d g006.d 43 55 20 0.18604651

g001.d g007.d 43 58 21 0.13953488

g001.d g008.d 43 64 33 0.27906977

g001.d g009.d 43 50 20 0.30232558

g001.d g010.d 43 68 38 0.30232558

....

|

So now we have a line for every pair of routines compared. We have the

routine designations, the lengths of the fingerprints, the Levenshtein

Distance (LD), and a ratio of how big the distance is compared with the

minimum and maximum that it could be given the lengths.

The problem is we now have a matrix with some 100 rows and 100 columns, and just having the matrix doesn't really tell us much. We need to do some sort of cluster analysis so we can identify similar routines. |

|

So let's create a tree of associations as follows:

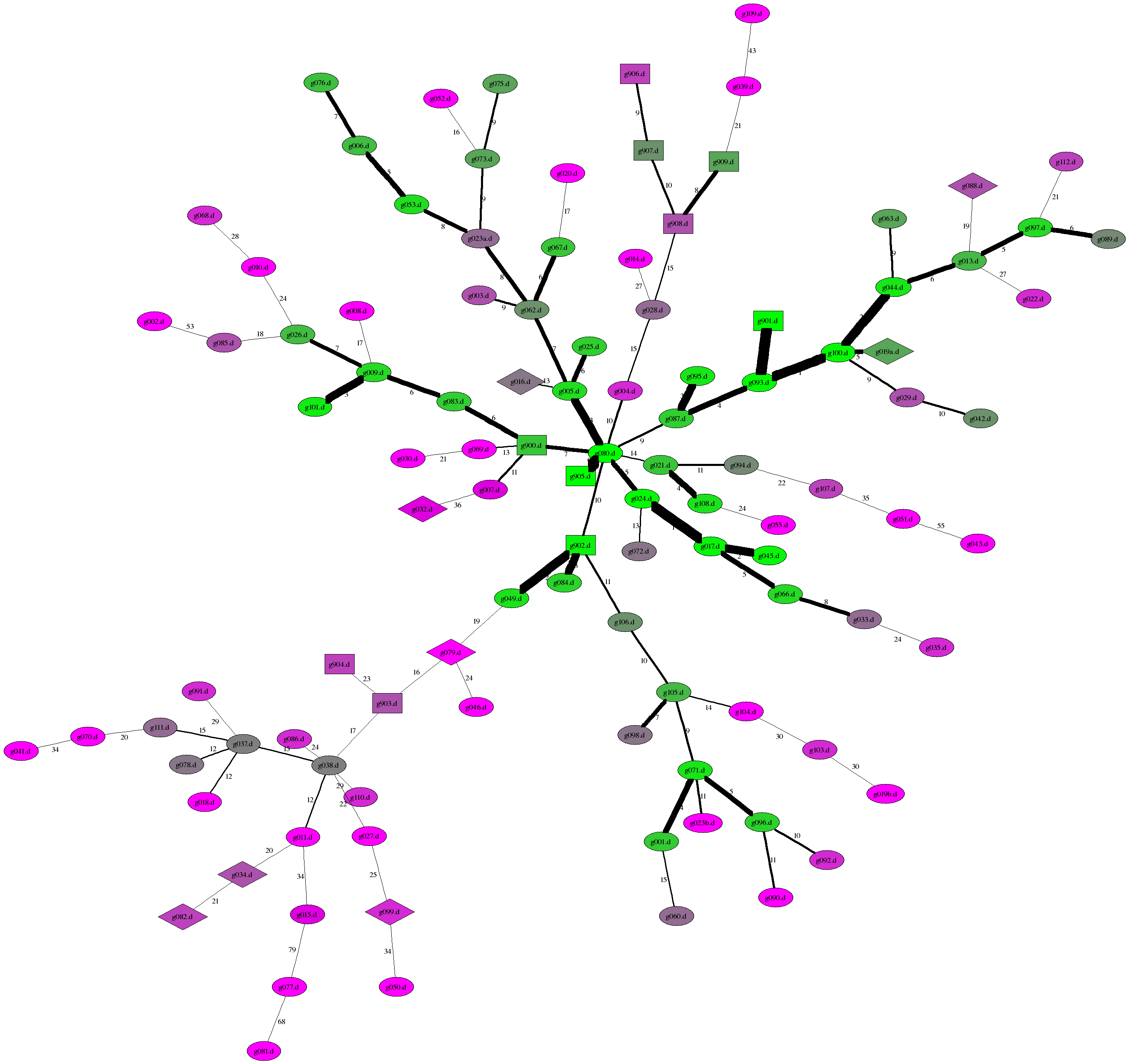

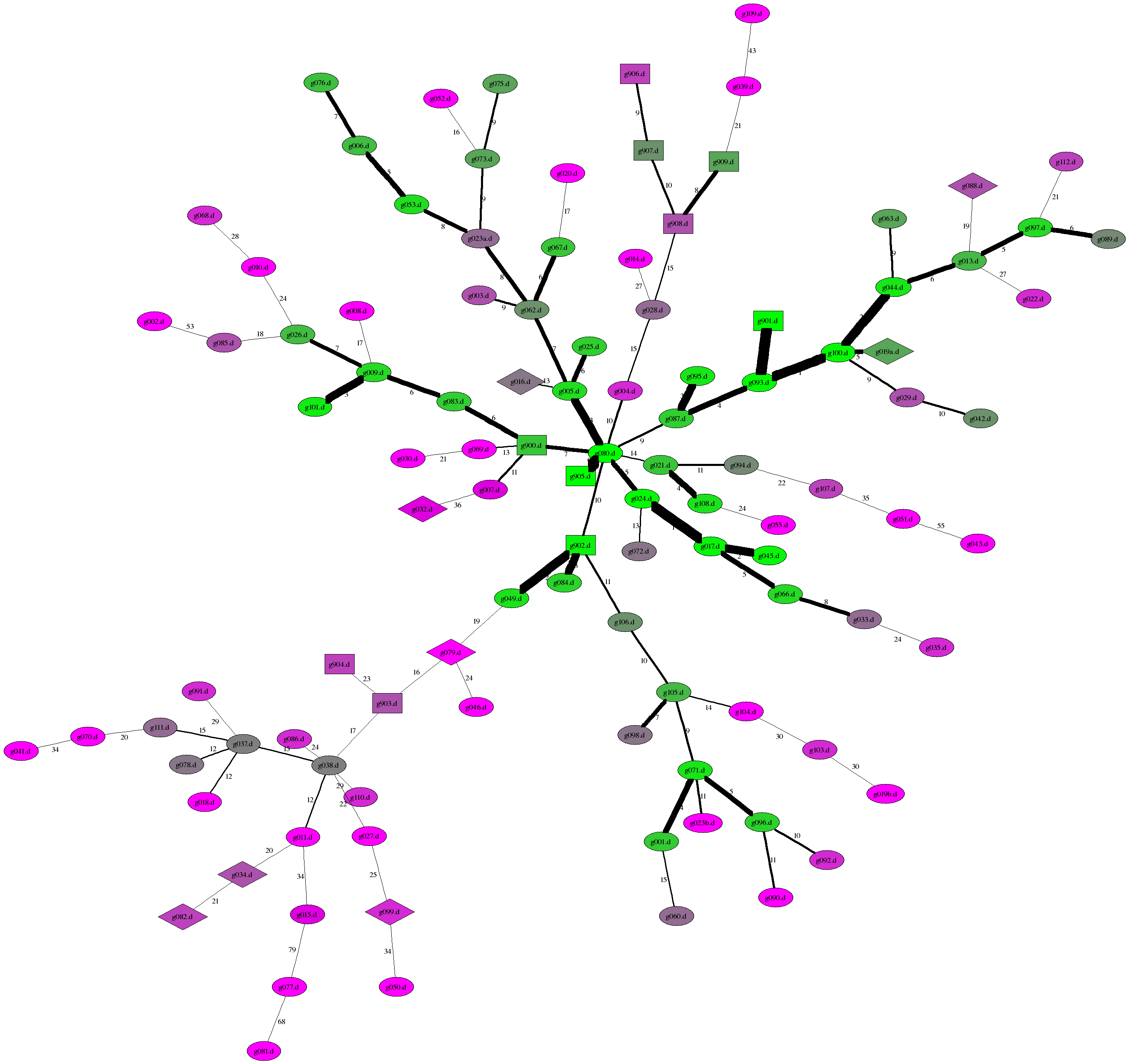

We can then use "neato" to render the resulting tree, using heavier lines for "more similar" nodes. Unfortunately, neato doesn't realise that this is a tree and frequently lays it out with unnecessarily crossing edges. So I wrote a short program that reads a "map" file looking for crossing edges, and rejected those layouts. By using the "-Gstart=XXX" parameter I could play with the initialisation of the layout, and then having found a setting that worked I could lay out the same graph several times with different renderings of the edges.

So, here's the result:

|

In this diagram routines that have been written as bases for comparison are

rendered as boxes, routines that failed the tests are in diamonds, and submitted

routines that passed the tests are ellipses.

The colors are vaguely related to the length of the shortest link to the node. Green nodes have a short link to another node, grey nodes middling links, and purple nodes are a long way from everyone. The Levenshtein Distance between the routines is shown as a label on the edge, inversely related to the thickness of the line, and is vaguely related to the length of the line. These are for indication only. The clumping is by no means convincing, although it is a start. We could do a few things to improve the "clumpiness" - we could include an extra edge here and there, or it might be enhanced if we were to find some outliers and write a routine that is a sort of amalgam of them to create a central node for a cluster, but we'll leave that idea for later. |

To help you find any given node, here are the coordinates for each routine.

These are X,Y as a proportion of the image, and Y=0 is at the top. For example,

g005.d has X=0.504 and Y=0.367, so it should be in the middle, about 1/3 of the

way down.

|

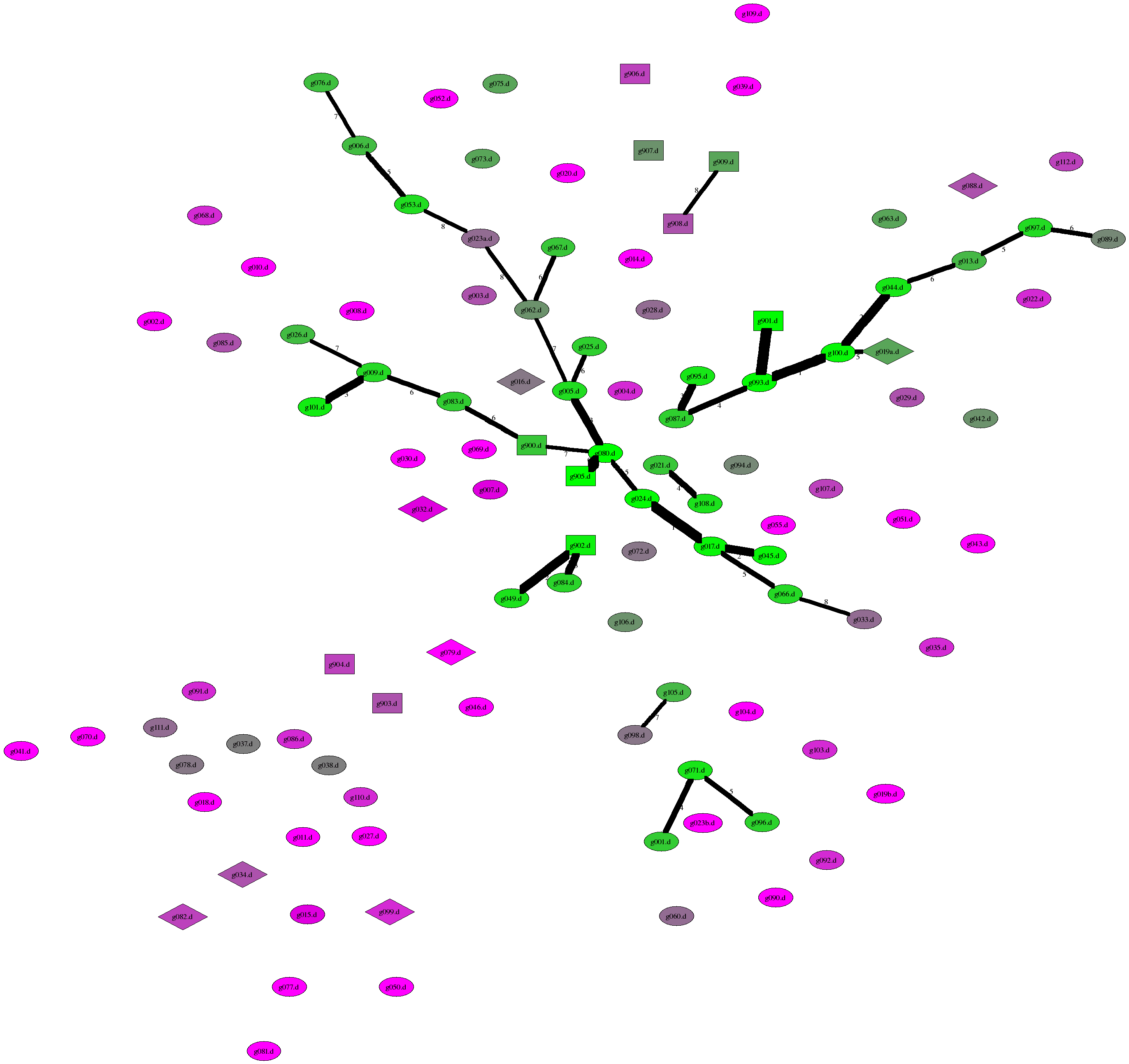

However, if we restrict the diagram to edges of length less than or equal to eight we get some clear "clumps" emerging

Here are the clusters:

g080.d g905.d g017.d g024.d g045.d

g005.d g009.d g101.d g006.d g053.d

g066.d g025.d g083.d g062.d g067.d

g900.d g076.d g026.d g023a.d g033.d

|

g093.d g901.d g100.d

g044.d g087.d g095.d

g013.d g097.d g019a.d

g089.d

|

g049.d

g902.d

g084.d

|

g001.d

g071.d

g096.d

|

g908.d g909.d

g098.d g105.d

g021.d g108.d

|

We can see that sprawling through our diagram is a main cluster. It seems to have a central few nodes with arms extending outwards.

So we'll start with one of those arms. Starting with the g076.d (at 0.284x0.077) and following the chain in towards comparison routine g905.d:

g076.d : vxc*x,cx)c*x;c*x;x=x=x;w*xNZ)w*xEx) xI;} *x =*x;xI;xI; }*x=*x;}

g006.d : vxc*x,cx)c*x =x;c*x=x;w*x )w*xEx) xI;} *x =*x;xI;xI; }*x=*x;}

g053.d : vxc*x,cx)c*x =x;c*x=x;w*x )i*xEx) xI;}e*x =*x;xI;xI;}}*x= Z;}

g023a.d : vxc*x,cx)c*x =x ,*x=x;w*x )i*xEx) xI;}e*xI=* xI;}}*x= Z;}

g062.d : vxc*x,cx)c* x=x;w*xNZ)i*xEx)*xI;}e*xI=* xI;}}*x= Z;}

g005.d : vxc*x,cx)c* x=x;w*xNZ)i*xNx) *xI=*x; *xI; }*x= Z;}

g080.d : vxc*x,cx)c* x=x;w*x )i*xNx) *xI=*x; xI; }*x= Z;}

With a rename of variables and some slight reformatting, here are routines g076.d and g053.d. The differences are slight, but noticeable:

void condense_by_removing( = void condense_by_removing(

char *z_terminated, = char *z_terminated,

char char_to_remove = char char_to_remove

) { = ) {

char * sp; | char *sp = z_terminated;

char * dp; | char *dp = z_terminated;

sp = dp = z_terminated; |

while (*sp != '\0') { | while (*sp) {

while (*sp == char_to_remove){ | if (*sp == char_to_remove) {

sp++; = sp++;

} = }

| else {

*dp = *sp; = *dp = *sp;

sp++; dp++; = sp++; dp++;

} = }

} = }

*dp = *sp; | *dp = '\0';

} = }

The initialisation of local variables is different, and a while loop is used in the left routine to step over consecutive instances of the character to remove, but otherwise the routines are very similar.

The other differences in this cluster are similar. Here's another chain from this cluster:

g026.d : vxc*x,cx)c*x,*x;x=x;x=x;w*x )i*xNx)*x =*x;xI;}xI;}*x=0;}

g101.d : vxc*x,cx)c*x,*x;x= x=x;w*x )i*xNx)*xI)=*xI) ;exI;}*x=0;}

g009.d : vxc*x,cx)c*x,*x;x= x=x;w*x )i*xNx)*xI =*xI ;exI; *x=0;}

g083.d : vxc*x,cx)c*x,*x;x= x=x;w*xNZ)i*xNx)*xI =*x ;}xI;}*x=Z;}

g900.d : vxc*x,cx)c*x =x; c*x=x;w*x )i*xNx)*xI =*x ;}xI;}*x=Z;}

Again, very small differences. Here are routines g026.d and g900.d:

void condense_by_removing( = void condense_by_removing(

char *str, = char *str,

char remove = char remove

) { = ) {

char *from, *to; | char *from = str;

from = str; | char *to = str;

to = str; |

while (*from) { = while (*from) {

if (*from != remove) { = if (*from != remove) {

*to = *from; | *to++ = *from;

to++; |

} = }

from++; = from++;

} = }

*to = 0; | *to = '\0';

} = }

The other major chain does have a surprise in it. Here we are coming in from g033.d:

g033.d : vxc*x,cx)c*x=x,*x=x;w*xNZ)i*xNx)ixNx)*x =*x;xI;}xI;}*x=Z;}

g066.d : vxc*x,cx)c *x=x;w*x )i*xNx)ixNx)*x =*x;xI;}xI;}*x=0;}

g045.d : vxc*x,cx)c *x=x;w*xN0)i* xNx)*x =*x;xI;}xI;}*x=0;}

g017.d : vxc*x,cx)c *x=x;w*x )i* xNx)*x =*x;xI;}xI;}*x=0;}

g024.d : vxc*x,cx)c *x=x;w*x )i* xNx)*x =*x;xI;}xI;}*x=Z;}

g080.d : vxc*x,cx)c *x=x;w*x )i* xNx)*xI=*x; xI;}*x=Z;}

Here's the comparison between g066.d and g024.d:

g066.d g024.d

====== ======

void condense_by_removing( = void condense_by_removing(

char *z_terminated, = char *z_terminated,

char char_to_remove = char char_to_remove

) { = ) {

char* next = z_terminated; = char *next = z_terminated;

while(*next) { = while (*next) {

if (*next != char_to_remove) { = if (*next != char_to_remove) {

if (next != z_terminated) |

*z_terminated = *next; = *z_terminated = *next;

z_terminated++; = z_terminated++;

} = }

next++; = next++;

} = }

*z_terminated = 0; = *z_terminated = '\0';

} = }

|

On

the

surface,

at

least.

It's

possible

that

the

additional

branch

actually

costs

more

than

the

copy,

especially

since

things

are

already

in

the

processor

cache,

but

branches

can

be

costly.

More about that later. |

Here is the other large cluster, which consists primarily of a single chain:

g089.d : vxc*x,cx)c*x =x;c*x=x;f ; *x ;xI)i*xN x)*xI =*x;}}*x=0;}

g097.d : vxc*x,cx)c*x,*x ;fx=x=x; *x ;xI)i*xN x)*xI =*x;}}*x=0;}

g013.d : vxc*x,cx)c*x,*x ;fx=x=x; *xNZ;xI)i*xN x)*xI =*x; *x=Z;}

g044.d : vxc*x,cx)c*x ;fx=x ; *xNZ;xI)i*xN x)*xI =*x; }*x=Z;}

g019a.d : vxc*x,cx)c*x ;fx=x ;!*x );xI)i*xN x)*xI)=*x;}}*x=0;}

g100.d : vxc*x,cx)c*x ;fx=x ; *x ;xI)i*xN x)*xI =*x; }*x=Z;}

g093.d : vxc*x,cx)c*x ;fx=x ; *x ;xI)i*xN x)*xI =*x; *x=Z;}

g901.d : vxc*x,cx)c*x ;fx=x ; *x ;xI)i*xN x)*xI =*x; *x=Z;}

g087.d : vxc*x,cx)c*x =x ;f ; *x ;xI)i*xN x)*xI =*x; *x=Z;}

g095.d : vxc*x,cx)c*x =x ;f ; *x ;xI)i xN*x)*xI =*x; }*x=Z;}

Here there are far fewer surprises. The main difference between this cluster and the previous is that these routines use a for loop, while the others use a while loop. Again the number of local variables and where they are initialised varies slightly, but that's the main difference between these routines.

Here are the routines in the other clusters compared with each other:

g084.d : vxc*x,cx)c*x=x;di*xNx)*xI)=*x;}}w*xI ));}

g902.d : vxc*x,cx)c*x=x;di*xNx)*xI =*x; }w*xI );}

g049.d : vxc*x,cx)c*x=x;di*xNx)*xI =*x; }w*xIN0);}

======

g096.d : vxc*x,cx)c*x,*x;x=x=x;w*x=*xI )i*xNx)xI;}}

g071.d : vxc*x,cx)c*x =x;c*x=x;w*x=*xI )i*xNx)xI; }

g001.d : vxc*x,cx)c*x =x;c*x=x;w*x=*xI)N0)i*xNx)xI;}}

======

g909.d : vxc*x,cx)c*x;w*xNZ)A*xNx))xI;i*xEZ)r;x=x;di*xNx)*xI=*x;}w*xI);}

g908.d : vxc*x,cx)c*x;w*xNZ)A*xNx))xI ;x=x;di*xNx)*xI=*x;}w*xI);}

======

g108.d : vxc*x,cx)ix=0;w*x)i*xEx)xI;}e*x-x)=*x;}xI;}*x-x)=*x;}

g021.d : vxc*x,cx)ix=0;w*x)i*xEx)xI; e*x-x)=*x; xI;}*x-x)= Z;}

======

g105.d : vxc*x,cx) c*x=x;w*x=*xI))i*xNx)Ix;}

g098.d : vxc*x,cx)fc*x=x; *x=*xI;xA*xNx) ;}

Of these perhaps the stand-out routine is g098.d, because it seems not to have an "if". Reformatting it somewhat we have this:

void condense_by_removing(char*A, char B) {

for ( char *J=A ; *J=*A++ ; J += *J!=B )

;

}

It's a clever routine, and fits on a single line in its original formatting. It's certainly "correct," and we'll look at the issues it raises later.

So of the routines submitted, how many have we examined?

Excluding those in languages other than C there were 98 routines submitted in total. Here are the ones in those clusters we've examined:

g001.d g005.d g006.d g009.d g013.d g017.d

g019a.d g021.d g023a.d g024.d g026.d g033.d

g044.d g045.d g049.d g053.d g062.d g066.d

g071.d g076.d g080.d g083.d g084.d g087.d

g089.d g093.d g095.d g096.d g097.d g098.d

g100.d g101.d g105.d g108.d

That's just 34 of them. What about the others?

As a next stage of investigation I looked for all those routines whose closest connection was with one already analysed. Then I compared them. For example, I've already analysed g062.d, and have found that g003.d has g062.d as its closest routine, so here is the comparison:

g062.d : vxc*x,cx)c*x=x;w*xNZ)i*xEx) *xI;}e*xI=*xI;}}*x = Z;}

g003.d : vxc*x,cx)c*x=x;w*xNZ)i*xEx)*x=*xI; e*xI=*xI; }*xI=*xI;}

Very similar, with small structural differences. Likewise these two:

g900.d : vxc*x,cx)c*x=x;c*x=x;w*x)i*xNx)*xI=*x; }x I;}*x=Z;}

g069.d : vxc*x,cx)c*x=x;c*x=x;f;;)i*xNx)*xI=*x;i*xEZ)b;xI;}}

Others are completely wacky:

g010.d : Xvxc*x,cx)c*x;xx;x=xx);fx=x; *x;)i*xEx)xx,x+1,x-x-x));xD;}exI;}*x=Z;}

g026.d : vxc*x,cx)c*x,*x;x= x ; x=x;w*x )i*xNx) *x =*x ;xI;} xI;}*x=0;}

==> g010.d <== | ==> g026.d <==

#include "../condense.h" |

void condense_by_removing( = void condense_by_removing(

char *str, = char *str,

char remove = char remove

) { = ) {

char *p; | char *from, *to;

size_t len; | from = str;

len = strlen(str); | to = str;

for(p = str; *p;) { | while (*from) {

if(*p == remove) { | if (*from != remove) {

memmove(p, p + 1, len - (p - str)); | *to = *from;

len--; | to++;

} = }

else |

p++; | from++;

} = }

*p = '\0'; | *to = 0;

} = }

Nope, not really at all similar.

So now we have a problem - what should we do next?

The problem really boils down to what we want to achieve. If we really want a complete network showing which routines are similar to which others then it's a long job, and I'm not entirely sure it's all that enlightening.

So I won't do that. I do have a few ideas about what I should do next, but I'm still working on them. After all, as Einstein said:

"If we knew what we were doing, it wouldn't be called 'research'!"

In particular, I've identified different structural aspects that appear in some code and not others, and I'm looking to see if I can automatically classify each routine according to those features that occur. If I can't do it automatically then I'll have to do it by hand.

Secondly, I already have in hand a similar analysis to that above but using the output of gcc -O3 -S to identify routines that are fundamentally identical. That's produced some interesting results.

Finally, though, I'll explain my opinions about readability, maintainability, and why some routines are intrinsically "better" than others. That's likely to provoke some discussion.

We'll see ...

|

During the process of looking at the routines I found one that appeared to have a bug, but which passed my tests. I quickly discovered that my tests were inadequate. Not really a surprise as I had written them quickly and simply intended them to be "good enough."

However, shown here at right is the routine I was using to test the routines I was sent (after checking them to make sure they had no malicious code). I've removed some of the code that prints sensible messages when it discovers a routine has a bug, and I've otherwise tightened it a little, but it's largely unchanged.

You might find it amusing to find what's wrong with it, and to devise a sensible looking routine that passes the tests, but which is nevertheless wrong.

But please, don't send it to me. I already have one.